Acknowledgements

This work was created in close collaboration with technical consultant Vitorio Miliano at Tertile, LLC. Vitorio contributed to this project end to end, including compiling the extensive lists of alternatives to ElevenLabs and Wonder Studio, troubleshooting most of the character creation workflows, and making the solutions accessible to lay readers.

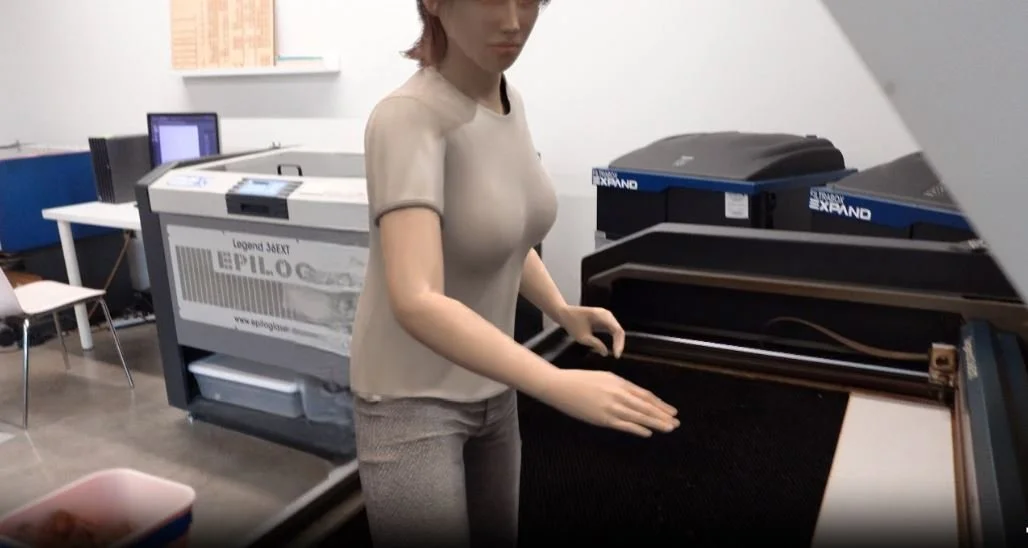

I extend my appreciation to everyone at MakeATX for their participation in the redaction video demo, without which this project wouldn’t have been possible. MakeATX is a laser cutting workspace that offers classes as well as custom projects, and everyone we worked with was wonderful.

I’m also indebted to Uday Gajendar, Lou Rosenfeld, Nathan Gold, and my fellow demo presenters at Rosenfeld’s Designing with AI conference: Jorge Arango, Bryce Benton, Yulya Besplemennova, Trisha Causley, and Fisayo Osilaja. Thank you also to my colleagues Dr. Diego Castaneda, Kristin Johnson, Keira Phifer, Sara Telfer, and Leslie Waugh for helpful discussions and feedback.

Many thanks to Nikola Gaborov at Wonder Dynamics for assistance with character troubleshooting.

If you would like to use custom characters in Wonder Studio, you may wish to consider hiring a dedicated contractor. We spoke briefly with Scott M. (Upwork), Olayemi S. (Upwork), and Chatamodel (Fiverr), although we did not end up engaging their services for this project.

Thanks also to Dr. Shuai Yang for use of a still frame generated by Rerender A Video.

Resources

Below are resources and tools used in the course of this project.

Biometric privacy laws

Audio tools

Video tools

Image tools

Tutorials and guides for Character Creator 4 and Wonder Studio conversion

S-Lab License 1.0 (for Rerender A Video ONLY)

Copyright 2023 S-Lab

Redistribution and use for non-commercial purpose in source and binary forms, with or without modification, are permitted provided that the following conditions are met:

Redistributions of source code must retain the above copyright notice, this list of conditions and the following disclaimer.

Redistributions in binary form must reproduce the above copyright notice, this list of conditions and the following disclaimer in the documentation and/or other materials provided with the distribution.

Neither the name of the copyright holder nor the names of its contributors may be used to endorse or promote products derived from this software without specific prior written permission.

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

In the event that redistribution and/or use for commercial purpose in source or binary forms, with or without modification is required, please contact the contributor(s) of the work.