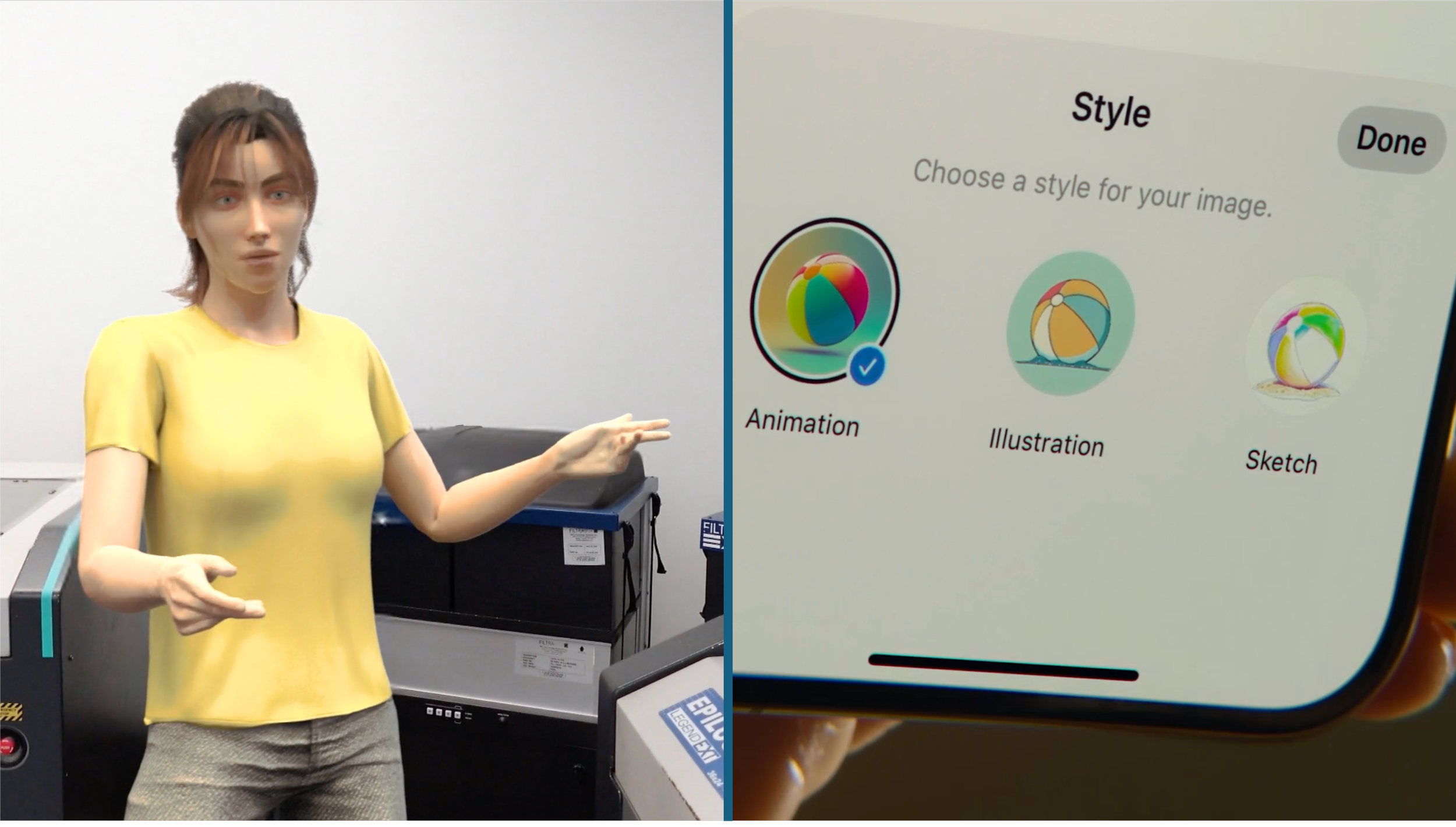

For AI image generation and video redaction, stylized imagery can communicate what’s real and what isn’t

In a recent blog post, Simon Willison highlighted Apple’s “ethical approach to AI generated images?” with Image Playground. His arguments closely mirror our case for using avatars to de-identify users in research recordings.

From Willison’s post:

> This feels like a clever way to address some of the ethical objections people have to this specific category of AI tool:
>
> - If you can’t create photorealistic images, you can’t generate deepfakes or offensive photos of people
> - By having obvious visual styles you ensure that AI generated images are instantly recognizable as such, without watermarks or similar…

At Rosenfeld Media’s 2024 Designing with AI conference, we demonstrated replacing a user’s entire body with a cartoonish avatar to preserve their privacy. But why not just replace their face using deepfake technology?

We chose this approach for reasons similar to those Willison raises.

More realistic redaction may make recordings more compelling to stakeholders, but it also creates the potential for abuse or deception, even when unintended.

An avatar that is instantly recognizable as such makes it clear to viewers what is real and what has been redacted. This is valuable not only from a legal perspective (making it easier to verify that recordings *have* been redacted), but also from the perspective of research veracity.

When we conduct and report research, every aspect of the user’s appearance and context can be meaningful. We don’t want any part of the redaction to be interpreted as meaningful when it isn’t.

Using avatars for redaction offers a way to communicate what’s real and what isn’t without entirely obscuring facial expressions and bodily movements.

It’s exciting to see Apple taking a similar approach with Image Playground.