Nobody wants to be their team’s AI “speedbump,” even if it’s better for the company and their users. Here’s what World Usability Day speakers recommended in this situation.

Happy World Usability Day! I’m presenting on AI Privacy & Trust at the Austin event through UXPA Austin.

Last year Vitorio Miliano and I built a demo using AI to redact biometric data from user research videos. Vitorio has since built a new, expanded, agent-based version that 1) runs entirely locally and 2) “understands” entire scenes using a perceptive-language model (Perceptron's Isaac).

Revisit Designing with AI 2025 with a new Rosenverse playlist. Our focus at DwAI25 was twofold: sharing AI best practices, and looking ahead to the future of design with new AI use cases. In the five months since the conference, we've seen AI and UX evolve rapidly, but the guidance of our DwAI25 speakers remains relevant.

I recently debuted a new talk on AI best practices for research. Here are the slides that got the biggest reaction.

We tested Google’s Gemini 2.5 Flash (used in NotebookLM) on qualitative analysis by running the same prompt multiple times. It improved on GPT-4o and Claude Sonnet 3.5 at quote accuracy but still struggled with relevance and meaning.

Alex Allwood is one of my past AI+research workshop attendees, and she’s written up a great account of her learnings.

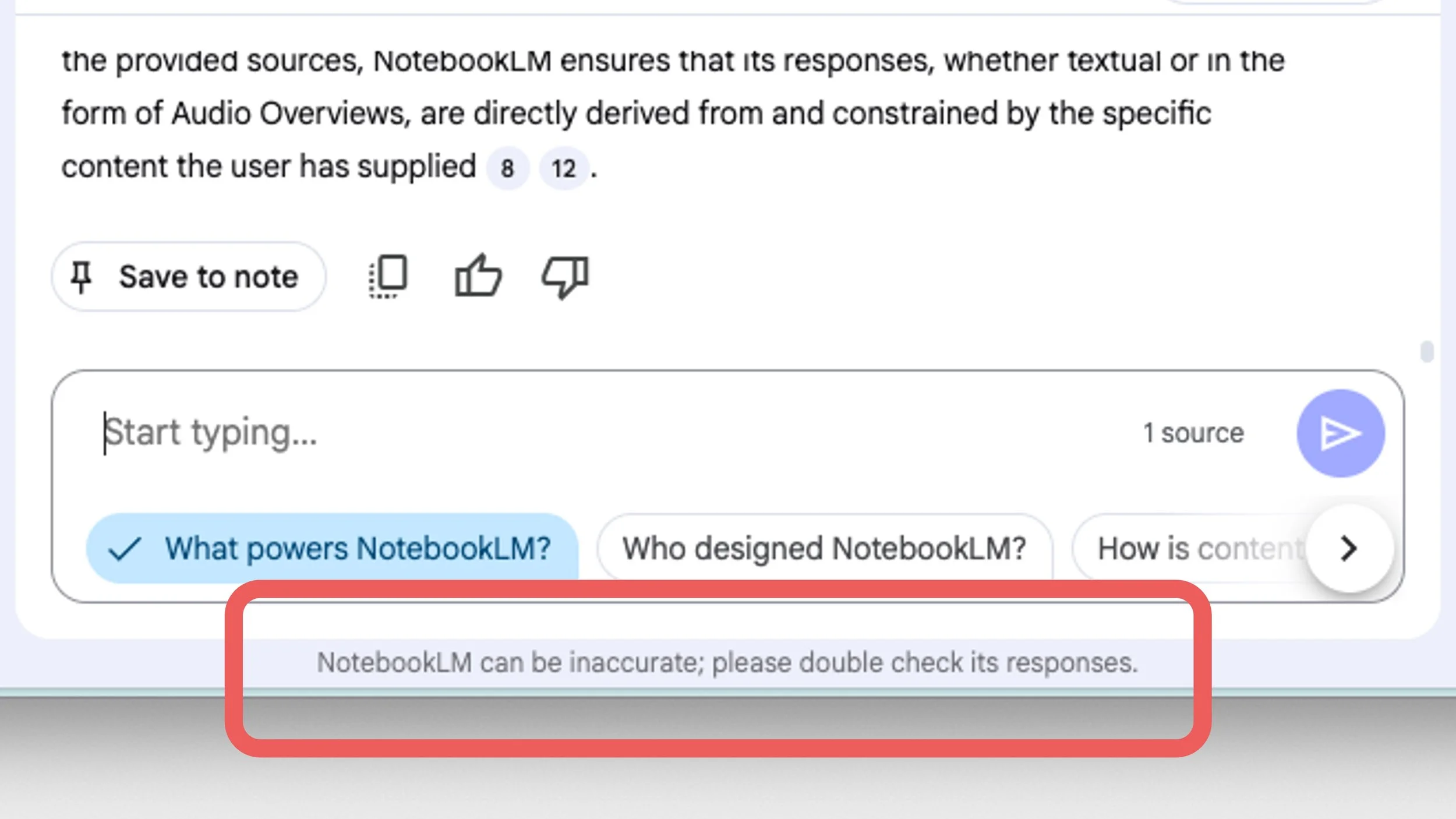

NotebookLM is useful, but it’s still just LLMs deep down. There’s no magic technology that makes it immune to the problems of other AI systems.

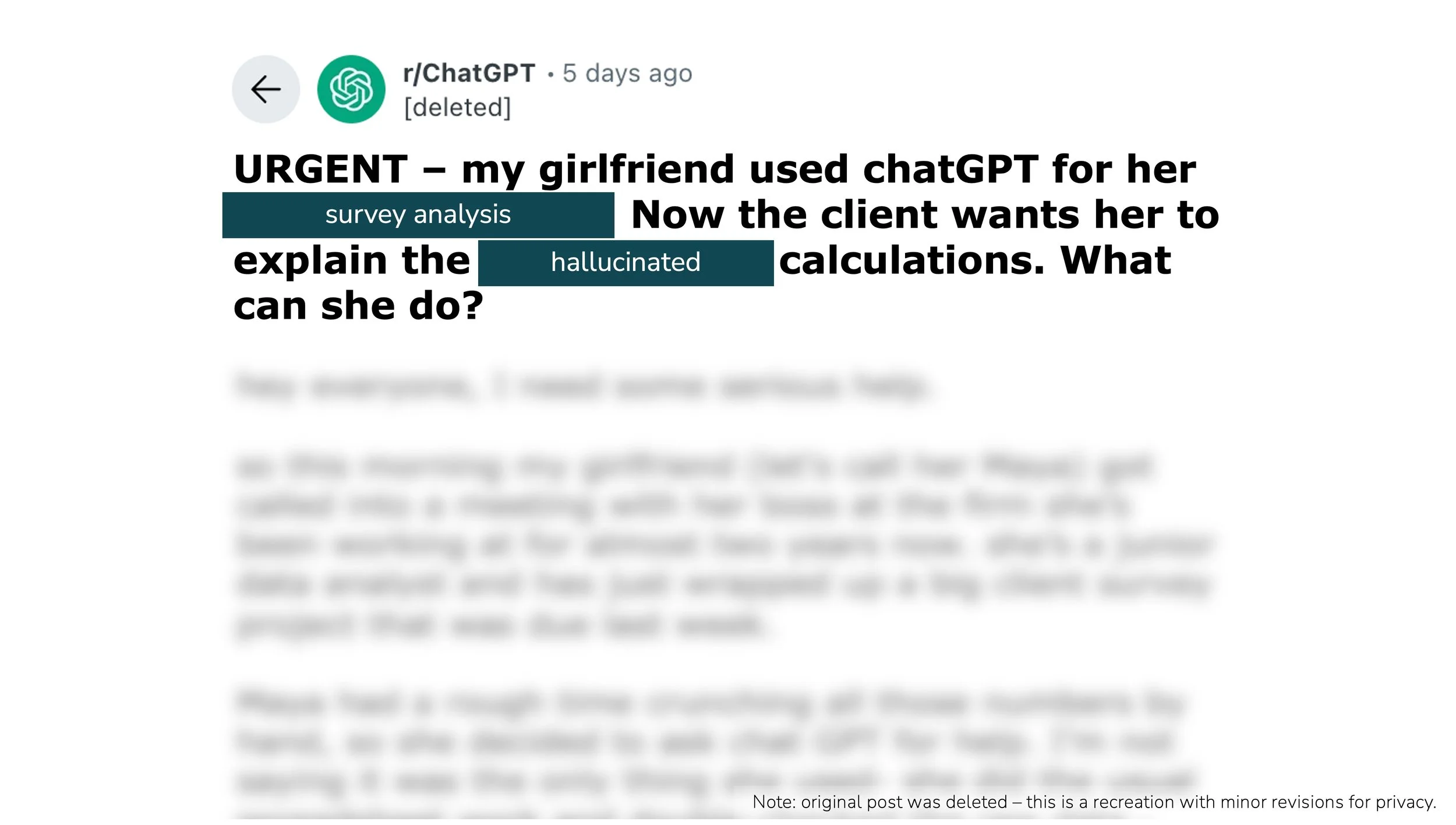

Seen last week on social media: a market researcher trying to save their job after reporting a nonsense, LLM-generated analysis of survey data. It’s a common mistake, and there’s not much training available for researchers on how to account for differences between generative AI and traditional research tools.

The reality is that many researchers are now expected to use LLM-based tools in their work, despite their shortcomings. And it can be hard to push back on mandates from above, or competition from peers who don’t understand the risks. Are there ways we can integrate LLMs into research while still being responsible professionals about it?

When we use AI tools in product design, strategy, and user research, we need to keep something important in mind: the AI is not “understanding” our requests, and even with safeguards, it may not catch when it’s making mistakes.

Error-checking AI output takes time, so we need to be wary of claims of “time saved” using AI for product research. A thought-provoking quote by Emily M. Bender from the March 31 episode of her Mystery AI Hype Theater 3000 podcast underscores this.

Siloed work leads to blind spots in AI feature design–you need more diverse perspectives. A story from early in my career illustrates why this requires a new level of partnership between UX and engineering.

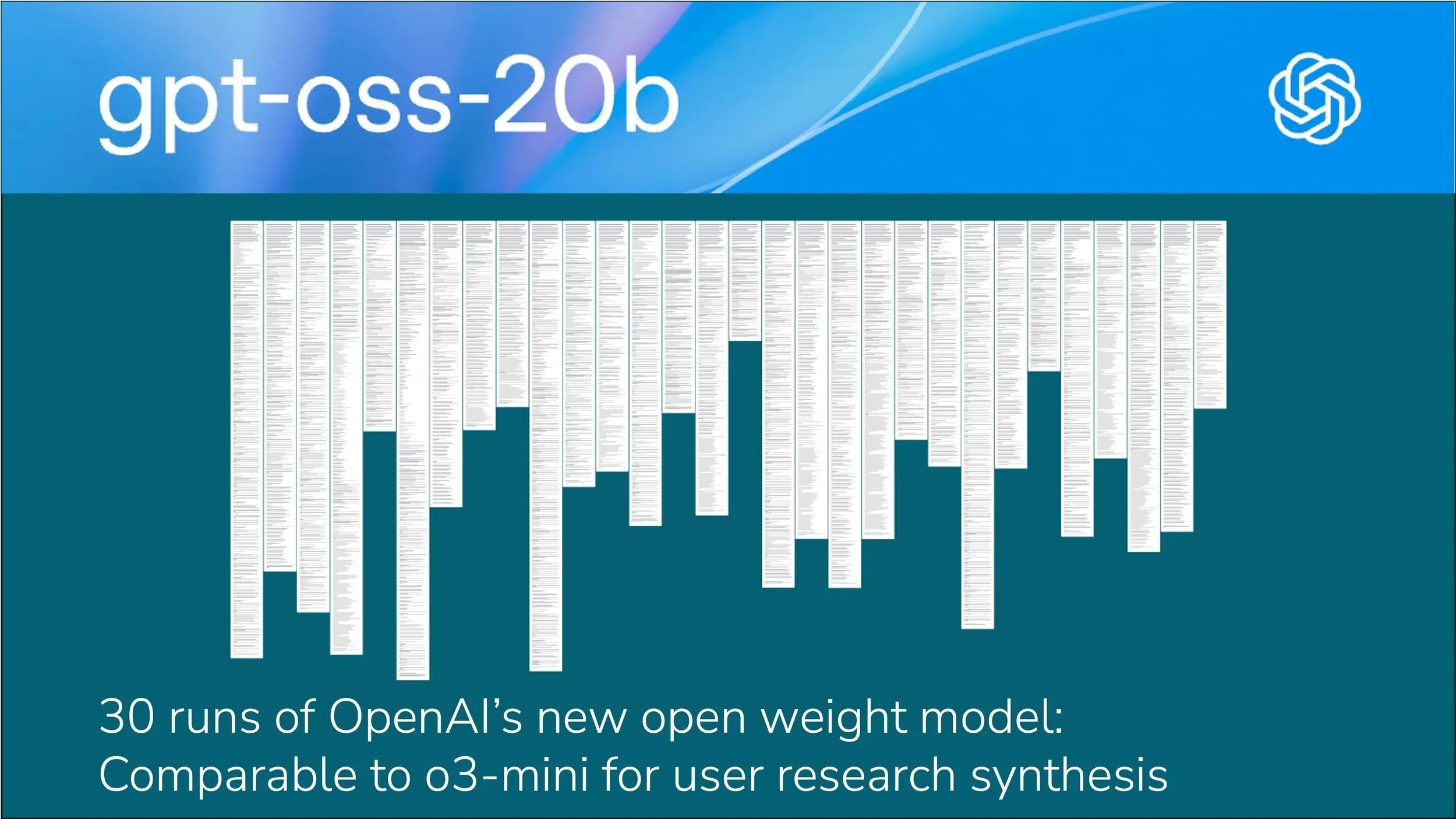

Since it launched, I’ve been testing how well gpt-oss-20b does at qualitative research synthesis. I’ve been able to run dozens of giant, 52K-token prompts on my standard-issue MacBook Pro in just a minute or two apiece, and the results have been surprisingly good overall–albeit with the same egregious errors that we’ve come to expect in LLM citations.

There’s a widely circulated piece of bad advice when it comes to AI: “If you don’t know how to use AI, just ask the AI!” The problem with this advice is that it assumes the AI “knows” about its own functioning. Spoiler alert: it doesn’t! (But it is good at making up plausible-sounding answers!)

Researchers should be in the driver's seat when it comes to AI tools, and that means understanding their tradeoffs just like we do with traditional research methods. My AI for UX Researchers workshop is not about teaching you to use any one specific AI tool–it's about giving you the skills to evaluate the performance of any AI model or service critically.

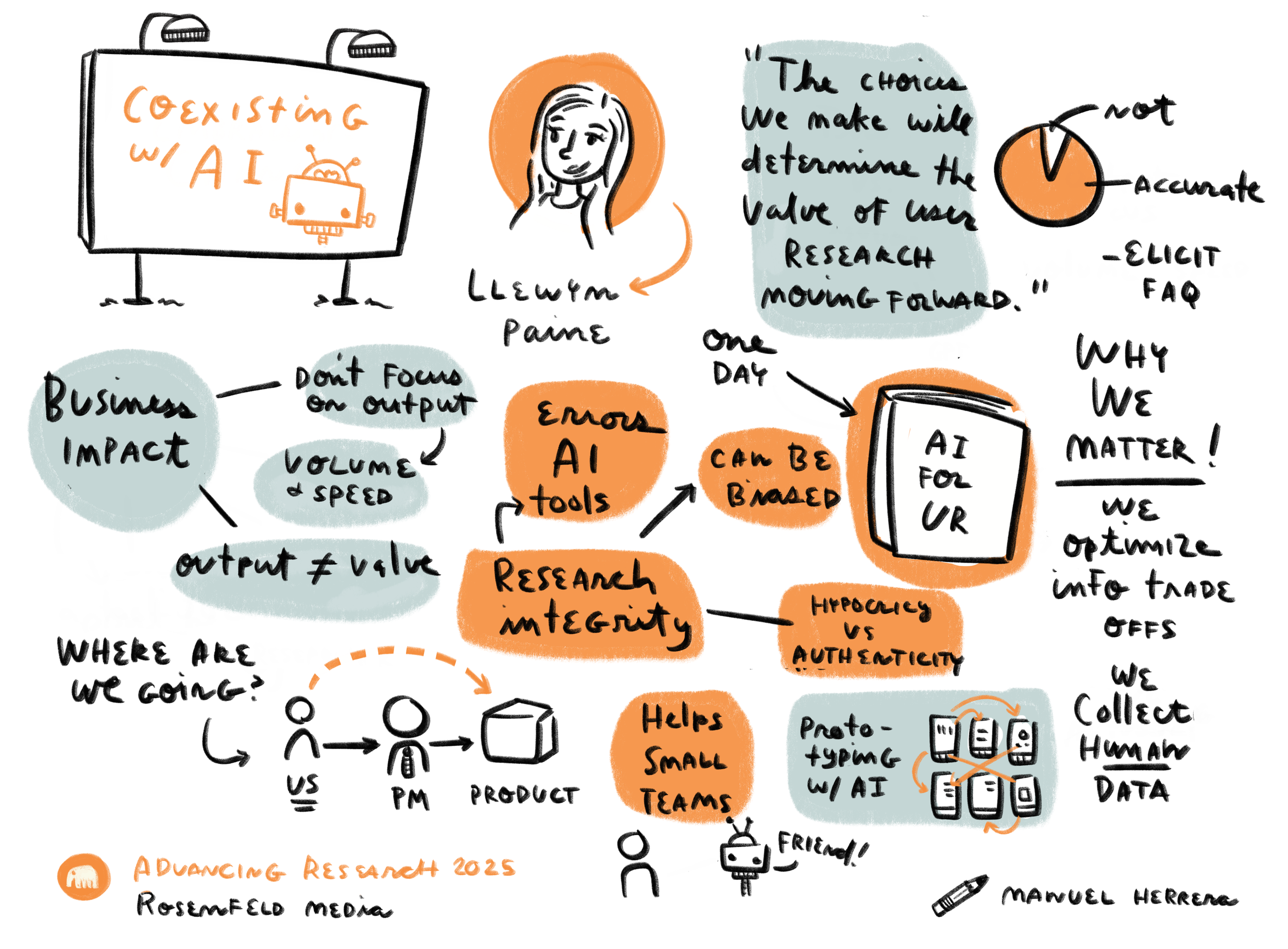

There’s a pressing need to measure AI adoption success in terms of outcomes, not output. A lot has changed since I gave this talk on “Coexisting with AI” at Rosenfeld Media’s Advancing Research in March, but this core principle hasn’t.

Last week, I presented to the UX research community at the Library of Congress. I shared five ways that UX roles are evolving with AI, from process changes to entirely new capabilities.
