Last year Vitorio Miliano and I built a demo using AI to redact biometric data from user research videos. Vitorio has since built a new, expanded, agent-based version that 1) runs entirely locally and 2) “understands” entire scenes using a perceptive-language model (Perceptron's Isaac).

Video data and person detection are important for a growing number of IoT/smart device applications, but many existing approaches require raw video to be transmitted into the cloud before biometric features can be redacted. Iravantchi et al. have found a new way to redact humans from videos before any video data is uploaded, or even stored on the device itself.

Aman Ibrahim created TerifAI (as in “terrify”), a bot that can clone your voice after only a minute or so of conversation. It’s an incredible demonstration of the rapid development (and growing threat) of voice cloning AI, and an example of why the need for biometric voice redaction is becoming more urgent.

As part of a recent biometric data redaction demo, we used ElevenLabs to replace the voices of participants in recordings with AI-generated speech. Now ElevenLabs has announced new voice models based on classic actors. This raises new questions about trade-offs between participant privacy and stakeholder perceptions.

Manuel Herrera did this fabulous sketchnote version of my AI doppelgangers demo at Designing With AI 2024. It's a concise summary of the limited biometric data redaction options available for recordings of humans today, and of some immediate issues with the AI tools that could take over this task in the future.

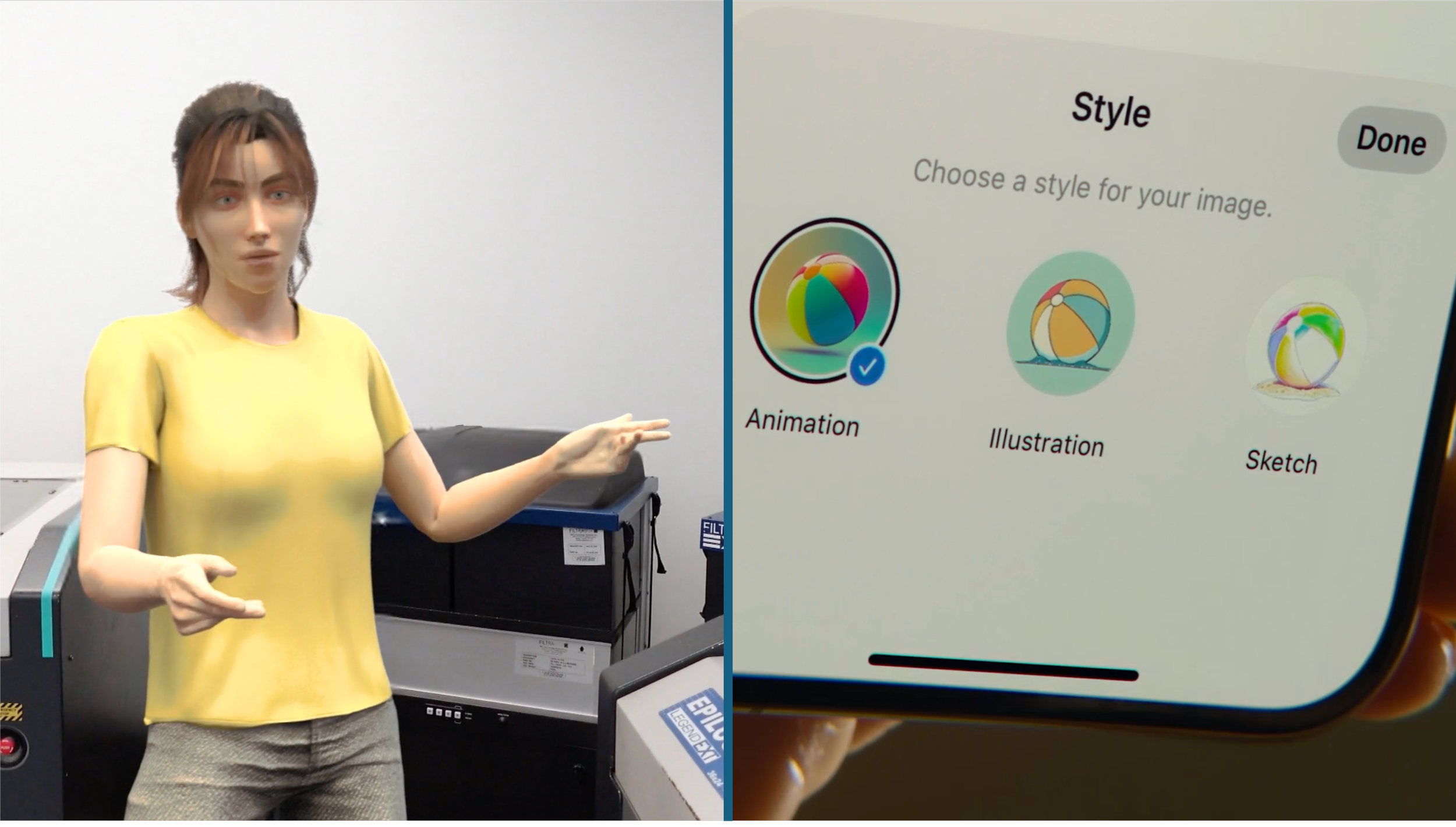

In a recent blog post, Simon Willison highlighted Apple’s “ethical approach to AI generated images?” with Image Playground. His arguments are very similar to our case for using avatars to de-identify users in research recordings.

What if you could eliminate data privacy concerns with your user research videos, security footage, etc., by automatically removing faces and voices? This project showcases a way to use AI to protect individuals’ privacy rather than threaten it.

Product teams are being told they can't keep research recordings they need because of privacy laws. This is a solvable problem, but the solution we identified requires legal, audio and video editing, and 3D art domain knowledge in addition to research know-how.

A recently passed bill in Colorado classifies brain waves as sensitive personal information that must be protected the same as fingerprints and facial recognition data. Most (though not all!) user research doesn’t collect neural data, but we should be prepared for legislation that restricts our ability to collect and store user data in unanticipated ways.

Target is the latest company to be hit with a class action lawsuit for biometric privacy violations, specifically involving facial recognition data. Legal departments are growing concerned that user research recordings present a similar risk.
