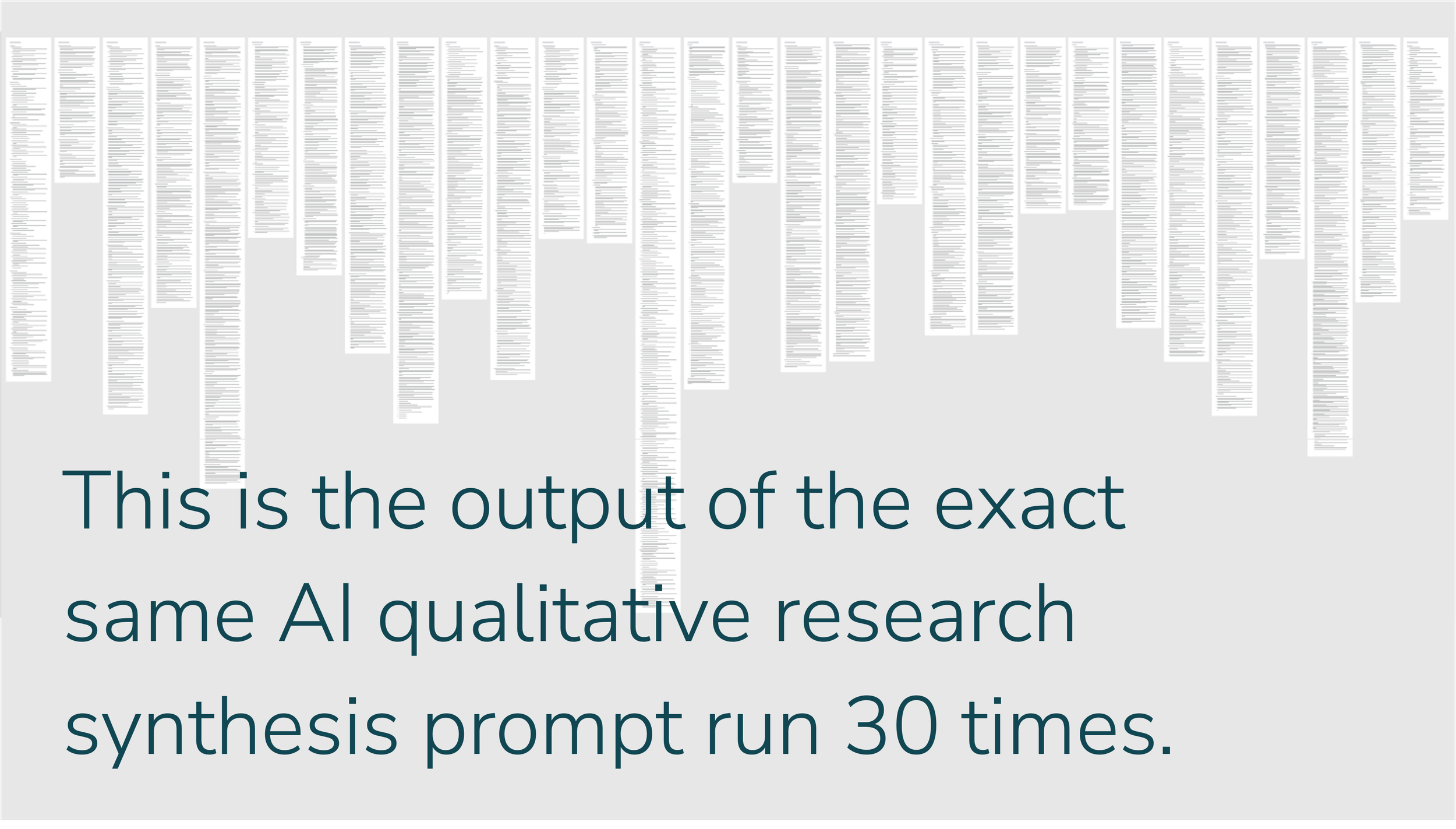

What happens when you run the same AI qualitative research synthesis prompt 30 times?

I invited 31 researchers to test AI research synthesis by running the exact same prompt. They learned LLM analysis is overhyped, but evaluating it is something you can do yourself.

Last month I ran an AI for User Research workshop with Rosenfeld Media, with a great first cohort of smart, thoughtful researchers.

A major limitation of a lot of AI for UXR “thought leadership” right now is that too much of it is anecdotal: researchers run datasets a few times through a commercial tool and decide whether or not the output is good enough based on only a handful of results.

But for nondeterministic systems like generative AI, repeated testing under controlled conditions is the only way to know how well they actually work. So that’s what we did in the workshop.
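If you want to try this kind of repeated testing yourself, the core loop is small. Here is a minimal sketch, not the setup we used in the workshop: `run_repeated` and `fake_synthesize` are hypothetical names, and `fake_synthesize` is a stand-in that returns a random number of placeholder themes so the example runs without any API access. In practice you would swap in a function that sends the prompt and data to your model of choice and returns the themes it produced.

```python
import random
from typing import Callable, List


def run_repeated(
    synthesize: Callable[[str, str], List[str]],
    prompt: str,
    data: str,
    n_runs: int = 30,
) -> List[List[str]]:
    """Call synthesize with identical inputs n_runs times and keep every output."""
    return [synthesize(prompt, data) for _ in range(n_runs)]


def fake_synthesize(prompt: str, data: str) -> List[str]:
    # Hypothetical stand-in for a real model call: it ignores its inputs and
    # returns between 3 and 8 placeholder theme names.
    n_themes = random.randint(3, 8)
    return [f"theme {i + 1}" for i in range(n_themes)]


random.seed(0)  # only so the stand-in behaves the same on every run of this script
outputs = run_repeated(fake_synthesize, "PROMPT TEXT", "TRANSCRIPT TEXT", n_runs=30)
print(len(outputs))  # one list of themes per run
```

The important design choice is that the prompt and the data never change between runs, so any variation in the outputs comes from the model itself.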

Our workshop participants produced a lot of interesting findings about qualitative research synthesis with AI:

LLMs can produce vastly different output even with the exact same prompt and data. The number of themes alone ranged from 5 to 18, with a median of 10.5.
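Summarizing that spread takes only a few lines once you have the themes from each run. The sketch below assumes each run is stored as a list of theme names; `summarize_theme_counts` is a hypothetical helper, and the example runs are invented placeholders, not the workshop data.

```python
import statistics
from typing import Dict, List


def summarize_theme_counts(runs: List[List[str]]) -> Dict[str, float]:
    """Report how many themes each run produced: run count, min, max, and median."""
    counts = [len(themes) for themes in runs]
    return {
        "runs": len(counts),
        "min": min(counts),
        "max": max(counts),
        "median": statistics.median(counts),
    }


# Invented example: three runs that returned 4, 7, and 10 themes.
example_runs = [
    [f"theme {i}" for i in range(4)],
    [f"theme {i}" for i in range(7)],
    [f"theme {i}" for i in range(10)],
]
summary = summarize_theme_counts(example_runs)
print(summary)
```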

Our AI-generated themes mapped pretty well to human-generated themes, but there were some notable differences. This led to a discussion of whether mapping to human themes is even the right metric to use to evaluate AI synthesis (how are we evaluating whether the human-generated themes were right in the first place?).

The bigger concern for the researchers in the workshop was the lack of supporting evidence for themes. The supporting quotes the LLM provided looked okay superficially, but on closer investigation every single participant found examples of data being misquoted or entirely fabricated. One person commented that validating the output was ultimately more work than performing the analysis themselves.
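Part of that validation can be automated. The following is a minimal sketch of a verbatim check, assuming you have the transcript as plain text and the LLM's supporting quotes as a list of strings; `normalize` and `check_quotes` are hypothetical helper names, and the transcript and quotes are invented for illustration. It only tells you whether a quote appears in the source after ignoring case, spacing, and curly versus straight quotation marks. It cannot tell you whether a real quote was attributed to the right person or used in the right context, so a human still has to review the results.

```python
import re
from typing import Dict, List


def normalize(text: str) -> str:
    """Lowercase, straighten curly quotes, and collapse whitespace."""
    text = text.lower()
    for curly, straight in (("\u2018", "'"), ("\u2019", "'"), ("\u201c", '"'), ("\u201d", '"')):
        text = text.replace(curly, straight)
    return re.sub(r"\s+", " ", text).strip()


def check_quotes(quotes: List[str], transcript: str) -> Dict[str, bool]:
    """Map each quote to True if it appears verbatim in the transcript after normalizing."""
    haystack = normalize(transcript)
    return {quote: normalize(quote) in haystack for quote in quotes}


# Invented example data.
transcript = "I usually give up when the export fails.\nThen I email support."
quotes = [
    "give up when the   export fails",  # real, with messy spacing
    "I love the export feature",        # not in the transcript
]
results = check_quotes(quotes, transcript)
for quote, found in results.items():
    print("FOUND  " if found else "MISSING", quote)
```

Anything flagged as missing is a candidate for a misquote or a fabrication and is worth checking by hand first.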

Now, I want to acknowledge that this is one dataset, one prompt (albeit a carefully vetted one, written by an industry expert), and one model (GPT-4o 2024-11-20). Some researchers claim that GPT-4o is more prone to hallucination in research tasks than other models, and perhaps it is, but it is still heavily used in current off-the-shelf AI research tools (and if you’re using off-the-shelf tools, you won’t always know which models they’re using unless you read a whole lot of fine print).

But my point is that this is exactly the level of scrutiny we should be applying to the output of all LLMs in research.

AI absolutely has its place in the modern researcher’s toolkit. But until we systematically evaluate its strengths and weaknesses, we're rolling the dice every time we use it.

We'll be running a second round of my workshop in June as part of Rosenfeld Media’s Designing with AI conference (ticket prices go up tomorrow; register with code PAINE-DWAI2025 for a discount). Or, to hear about other upcoming workshops and events from me, sign up for my mailing list.