Proud consultant moment: My AI+UXR workshop attendees discovered their own novel problems with LLM-based synthesis

A highlight of last week’s AI+UXR workshop: seeing participants discover their own novel issues with AI synthesis for user research.

On Friday we re-ran my >30-person AI synthesis comparison exercise. The idea is this:

We prompt the large language model (LLM) to generate themes and supporting quotes for a standard set of interview transcripts

We run the exact same prompt 30+ times

We analyze the output for errors and overall effectiveness

We used a different model this time (Claude 3.5 Sonnet 2024-06-20) and observed different problems (more on that in a future post).

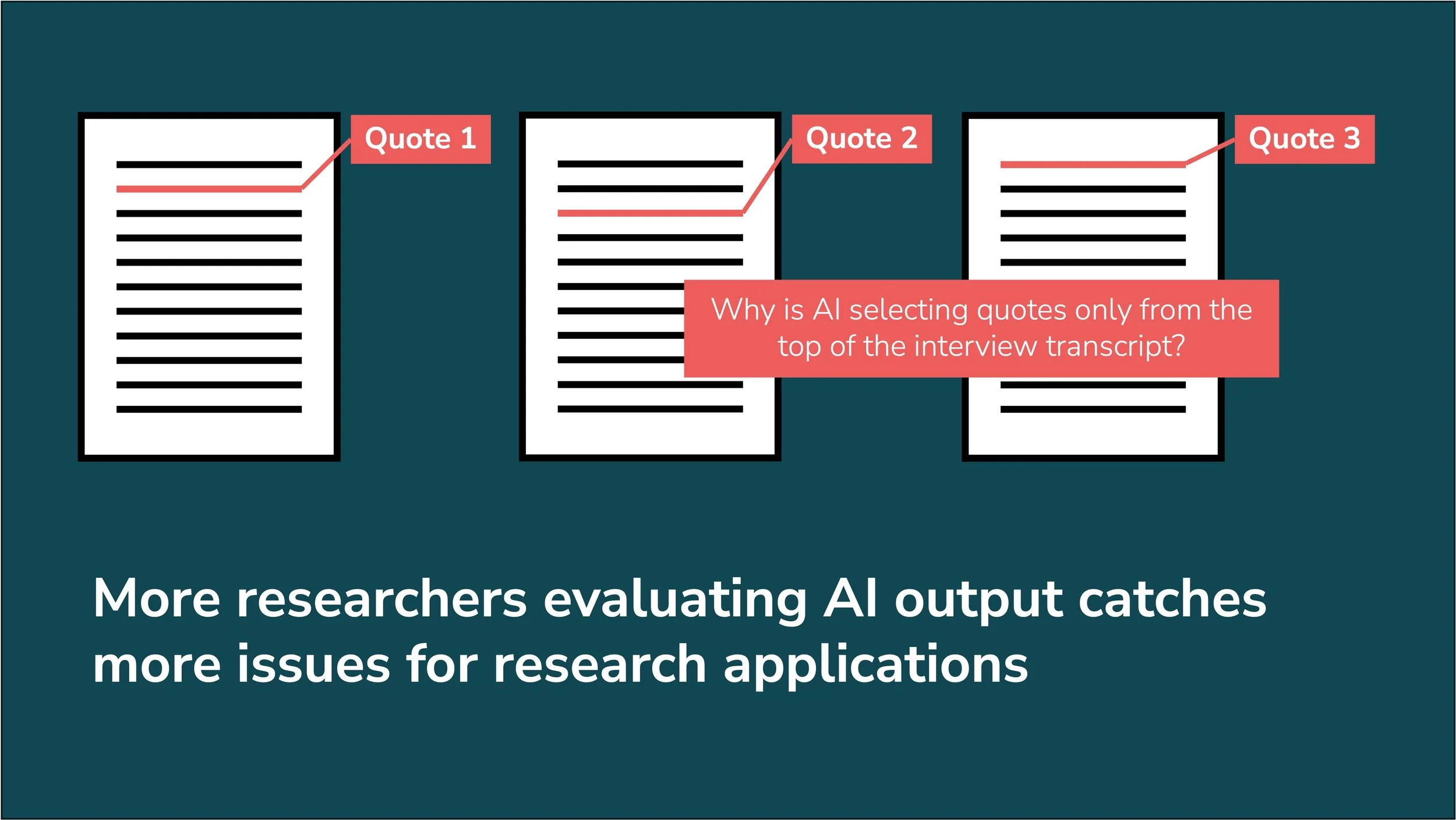

But in the course of analyzing her output, one of my participants discovered an issue I hadn’t recognized in any of my own pre-tests: the LLM was only citing quotes from the first quarter of her transcripts, reflecting a potential bias in how the LLM was surfacing supporting evidence.

This raises some important questions:

Is this a systematic bias, or was it just a coincidence in this one run?

Are there ways we could adjust the prompt to ensure more even coverage?

Had this been a real study, would this have caused bias in the team’s decision-making as a result?

I don’t know! (Without running more tests, at least.)

But it illustrates importance of having multiple sets of eyes on these kinds of tests. I’ve run this exact same prompt and dataset hundreds of times. I evaluate the output on metrics gathered from an extensive academic lit review, as well as our own tests.

But it takes more perspectives, more experiments, and more datasets to uncover all the issues.

No one person can do it alone!

It’s only by running controlled experiments and sharing our results (especially the failures!) that we will gradually learn to apply these tools safely and effectively in our work. And a major focus of my workshops is helping researchers understand what we need to control to make these experiments effective.

I’m appreciative of all of my Rosenfeld Designing with AI workshop participants for bringing their own perspectives to this important problem. I’m eager to see what other valuable discoveries they’ll make as they bring a more critical AI lens into their own work culture.

To get updates from me on future workshops, sign up here.