Why you should use a fresh chat for each new AI research task

In my AI+UXR workshops, I recommend starting a fresh chat each time you ask the LLM to do a significant task. Why?

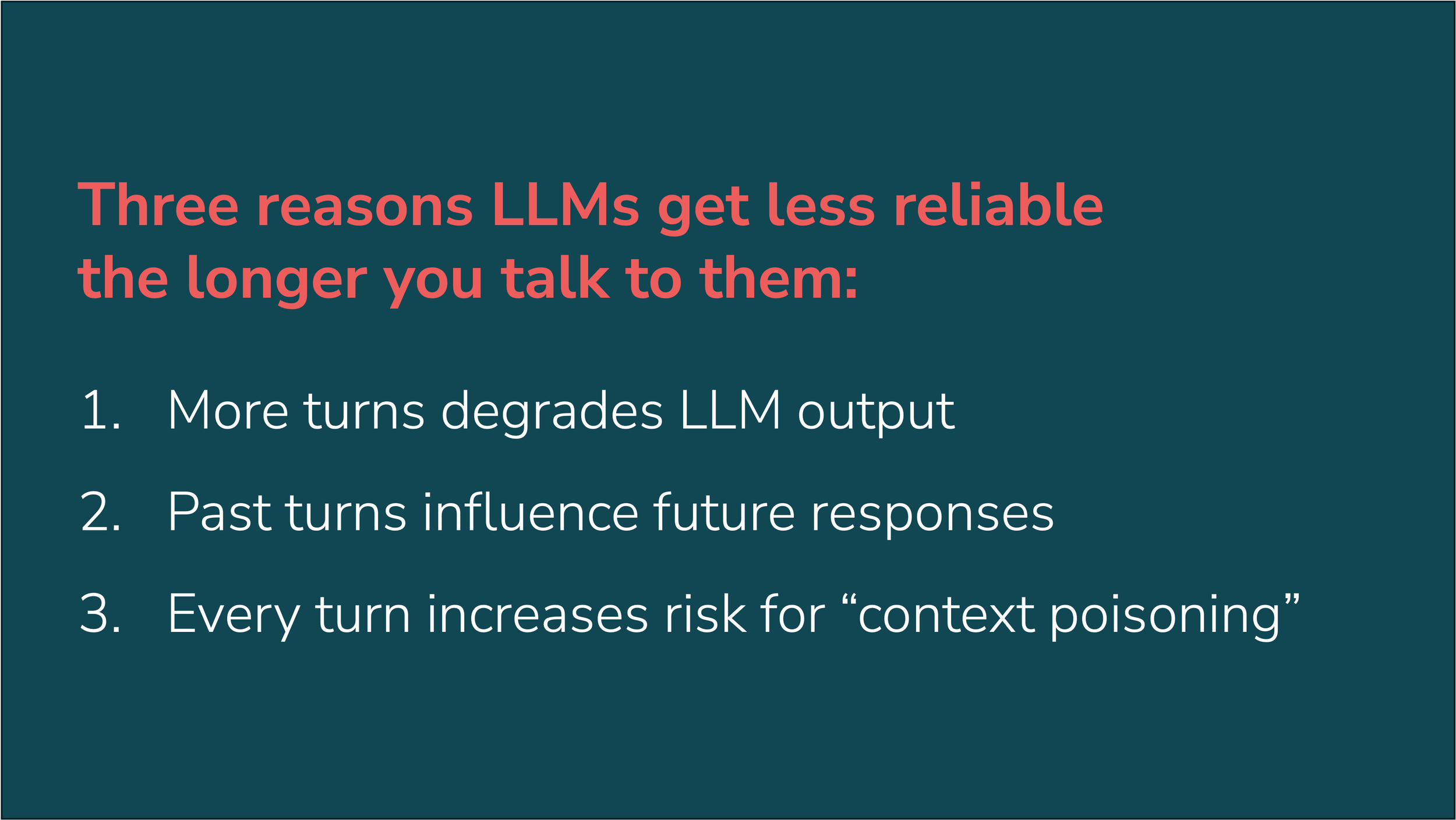

Because UX research tools need to be reliable, and the more you talk to the LLM, the more that reliability takes a hit. This can introduce unknown errors.

This happens for several reasons, but here are a few big (albeit interrelated) ones:

1️⃣ LLMs can get lost even in short multi-turn conversations

According to recent research from Microsoft and Salesforce, providing instructions over multiple turns (vs. all at once upfront) can dramatically degrade the output of LLMs. This is true even for reasoning models like o3 and DeepSeek-R1, which “deteriorate in similar ways.”
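To make the difference concrete, here is a minimal Python sketch of the same instructions delivered one per turn versus consolidated into a single upfront message. The `role`/`content` dictionary shape follows a common chat-API convention and is an assumption here, as are the example instructions and both helper functions. Nothing is sent to a model.

```python
# Hypothetical instructions a researcher might drip-feed over several turns.
sharded_turns = [
    "Summarize these interview notes.",
    "Focus on onboarding pain points.",
    "Quote participants directly where possible.",
    "Keep it under 200 words.",
]


def as_multi_turn(turns):
    """One user message per instruction.

    In a real chat, a model reply would sit between each of these,
    and every reply would become part of the context too.
    """
    return [{"role": "user", "content": turn} for turn in turns]


def consolidate(turns):
    """All instructions combined into a single upfront user message."""
    numbered = "\n".join(f"{i}. {turn}" for i, turn in enumerate(turns, start=1))
    content = "Follow all of these instructions:\n" + numbered
    return [{"role": "user", "content": content}]


multi = as_multi_turn(sharded_turns)
single = consolidate(sharded_turns)

print(f"multi-turn: {len(multi)} user messages")
print(f"consolidated: {len(single)} user message")
print(single[0]["content"])
```

The consolidated version gives the model the complete task at once, so it never has to commit to an answer before it has seen all of the requirements.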

2️⃣ Past turns influence how the LLM weights different concepts

In the workshop, I show a conversation that continuously, subtly references safaris, until the LLM takes a hard turn and generates content with a giraffe in it. Every token influences future tokens, and repeated concepts (even inadvertent ones) can “prime” the model to produce unexpected output.

3️⃣ Every turn is an opportunity for “context poisoning”

“Context poisoning” is when inaccurate, irrelevant, or hallucinated information gets into the LLM context, causing misleading results or deviation from instructions. This is sometimes exploited to jailbreak LLMs, but it can happen unintentionally as well. In simple terms, bad assumptions early on are hard to recover from.

To avoid these issues, I recommend:

Starting the conversation from scratch any time you’re doing an important research task (including turning off memory and custom instructions)

Using a single well-structured prompt when possible

And, as always, testing carefully and staying alert to errors in LLM output
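The three recommendations above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a definitive implementation: the section names in `build_prompt`, the `role`/`content` message shape, and the `missing_terms` check are all hypothetical, and the call that would actually send the messages to a model is left out.

```python
def build_prompt(role, task, data, output_format):
    """Assemble one structured prompt. The section names are illustrative."""
    sections = [
        ("Role", role),
        ("Task", task),
        ("Data", data),
        ("Output format", output_format),
    ]
    return "\n\n".join(f"## {name}\n{text.strip()}" for name, text in sections)


def fresh_conversation(prompt):
    """Return a brand-new message list with no earlier turns carried over.

    Memory and custom instructions are settings in the chat product itself,
    so those still have to be turned off there.
    """
    return [{"role": "user", "content": prompt}]


def missing_terms(output, required_terms):
    """Crude sanity check: which required terms never appear in the output?"""
    lowered = output.lower()
    return [term for term in required_terms if term.lower() not in lowered]


prompt = build_prompt(
    role="You are assisting a UX researcher.",
    task="Identify the top three themes in the notes below.",
    data="(paste interview notes here)",
    output_format="A numbered list, each theme with one supporting quote.",
)
conversation = fresh_conversation(prompt)

# Stand-in for a model response, used only to exercise the check.
example_output = "1. Theme: confusing onboarding"
print(missing_terms(example_output, ["theme", "quote"]))
```

A check this simple will not catch hallucinated quotes or subtle misreadings, so it supplements careful manual review rather than replacing it.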

I talk about these issues (and a lot more) in my workshops, and I’m writing about this today because the question was asked by some of my amazing workshop participants.

Sign up to get notified about my next public workshop. Or, if you’re looking for private, in-house training for your team, drop me a note!