In a recent interview about Designing with AI 2025, I discussed the top AI skills for UX professionals in 2025 and who to learn from.

In my AI + UXR workshop, I teach attendees to check the subprocessors of AI tools to understand what models they’re using under the hood. So it was delightful validation to see Simon Willison mention in a May 11 post that he’s started doing the same thing. That overlap also highlights the importance of sharing our learnings in public.

How are you evaluating your AI tools? This is the question UX and product leaders should be asking as they choose AI training and partnerships for their teams, and it's one I recently discussed in an interview.

When you talk with UX practitioners about AI, one of their biggest concerns is "grift." They want to know where AI is actually useful and avoid buying into the hype of people who are only in it to make a sale. I talked about this in a recent interview.

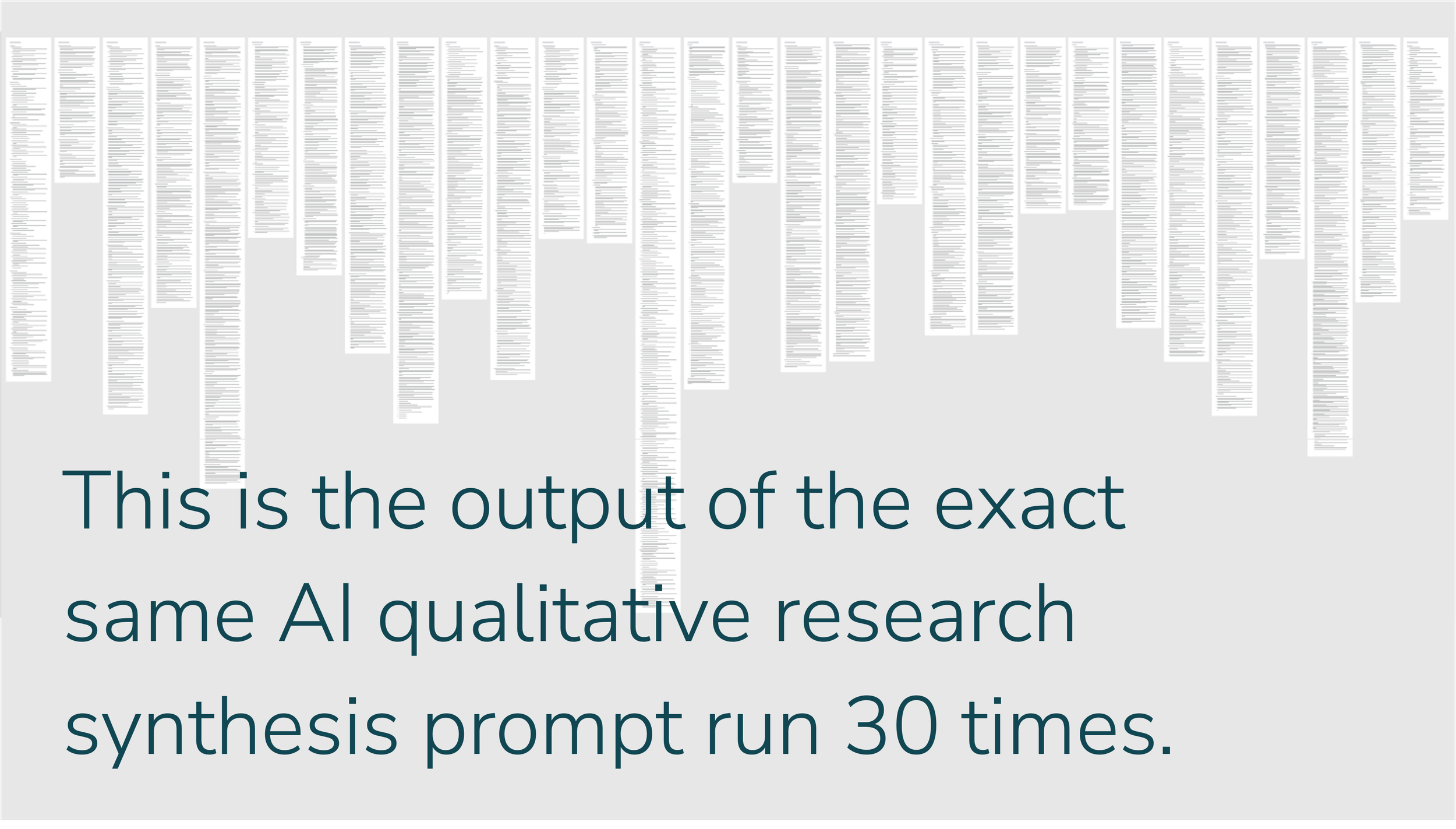

I invited 31 researchers to test AI research synthesis by running the exact same prompt. They learned LLM analysis is overhyped, but evaluating it is something you can do yourself.

When we selected our speakers for Designing with AI 2025, we were looking for two things: creativity and critical thinking. And they have truly delivered.

It's easy to fall into the trap of confusing "output" with "impact." But as a researcher in a world of AI tools, that's a good way to devalue your work.

As more designers, PMs, founders, and researchers themselves begin to use generative AI tools for research synthesis, many researchers are wondering whether these tools are good enough to replace their work. The short answer: it's a lot like using AI for anything else. AI output is "adequate."

AI is great at producing generic, average output. Average work may be perfectly fine to keep bringing home a paycheck, but if you don’t value it yourself, it will not be valued by others in the long term.

From Seth Godin: “Once we see a pattern of AI getting tasks right, we're inclined to trust it more and more, verifying less often and moving on to tasks that don't meet these standards.”

Should user researchers prepare to move into the role of “orchestrating and validating the work of AI systems” like OpenAI’s Deep Research?

With renewed attention to smart glasses thanks to Meta’s Orion, spatial computing is once again on people’s radar. AI’s natural-interface capabilities and multimodality are proving to be powerful tools for bringing information technology into the physical world.

At Austin Tech Week 2024, companies from startups to Meta shared their design process for AI. These were the four principles they all agreed on.

Emerging technology creates perplexing problems for user-centered design, such as: how do you take a user-centered approach when it’s too early to have a defined user? Does this mean you should throw out user-centered design, or choose your target user based solely on TAM?

With all the hype around AI, it’s hard to recognize what issues are attention-worthy. So last week’s Rosenfeld Media community workshop on AI was a valuable opportunity to see what questions are top-of-mind for the UX community.

AI is a technology with rippling systems-level effects. Collaborating across diverse disciplines is the only way to begin to understand the full implications of our AI design decisions. This is the focus of an upcoming community workshop I'll be moderating on artificial intelligence.

In Rosenfeld Media’s upcoming Advancing Research community workshop on artificial intelligence, I’ll be talking with Rachael Dietkus, Nishanshi Shukla, and David Womack about what researchers and tech professionals can do to mitigate issues in AI tool use, from psychological harm to users to damage to our knowledge bases.

Analysts argue we’re entering the “Trough of Disillusionment” for AI. That may be bad news for investors, but for product teams, it offers new opportunities to build products that solve more meaningful problems for their users.
